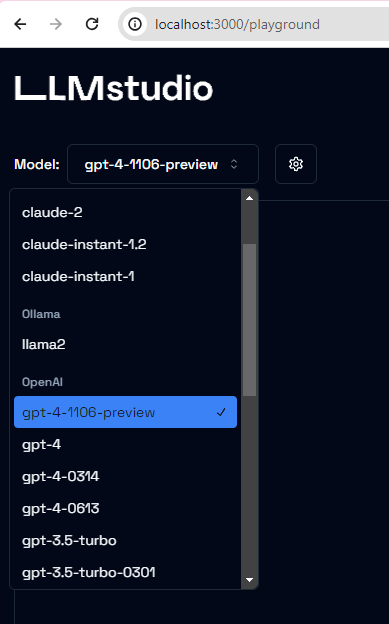

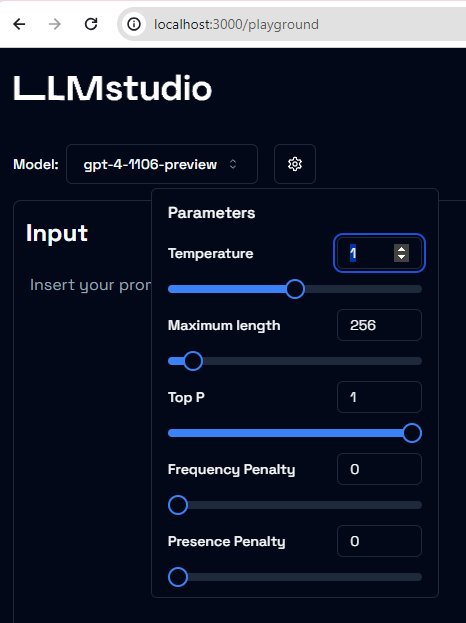

Creating the ideal prompt can be the key to turning a below-average outcome into a remarkably relevant one. Finding the right prompt often takes many revisions and a good deal of trial and error. I wanted a way to look back at my past attempts: the changes I made to prompts, the LLMs I tried, other LLM settings, and the relative cost of each combination. A few tools help with this: promptflow, LangSmith, LLMStudio, and others.

I tried LLMStudio and promptflow. This article is about LLMStudio.

If you are installing LLMStudio on Windows, use WSL. Here are the steps:

- Create a folder on your machine.

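- Create a `.env` file in this folder with the following content, replacing the placeholders with your own API keys:

```
OPENAI_API_KEY="sk-api_key"
ANTHROPIC_API_KEY="sk-api_key"
```

- Enter WSL and activate a conda Python environment. If the environment does not exist yet, create it first with `conda create -n lmstd python`:

```
(base) PS c:\code\llmstudio> wsl
(base) ash@DESKTOP:/mnt/d/code/llmstudio$ conda activate lmstd
```

LLMStudio runs on Bun, a JavaScript runtime like Node.js.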

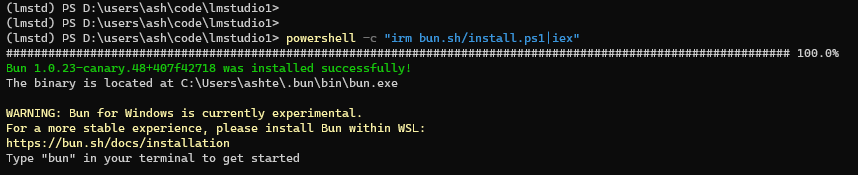

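- Install Bun. Instructions for Windows are here: https://bun.sh/docs/installation#windows

```
sudo apt-get install unzip
powershell -c "irm bun.sh/install.ps1|iex"
```

Bun's Linux install script requires `unzip`. The PowerShell one-liner installs Bun natively on Windows. Inside WSL, the Linux installer is `curl -fsSL https://bun.sh/install | bash`.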

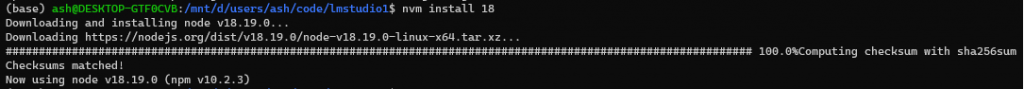

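- If you run into the following error, install Node.js v18. Next.js expects a global `Request` class, and Node.js provides one by default starting with v18:

```
ReferenceError: Request is not defined
    at Object.<anonymous> (/home/ash/miniconda3/lib/python3.11/site-packages/llmstudio/ui/node_modules/next/dist/server/web/spec-extension/request.js:28:27)
```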

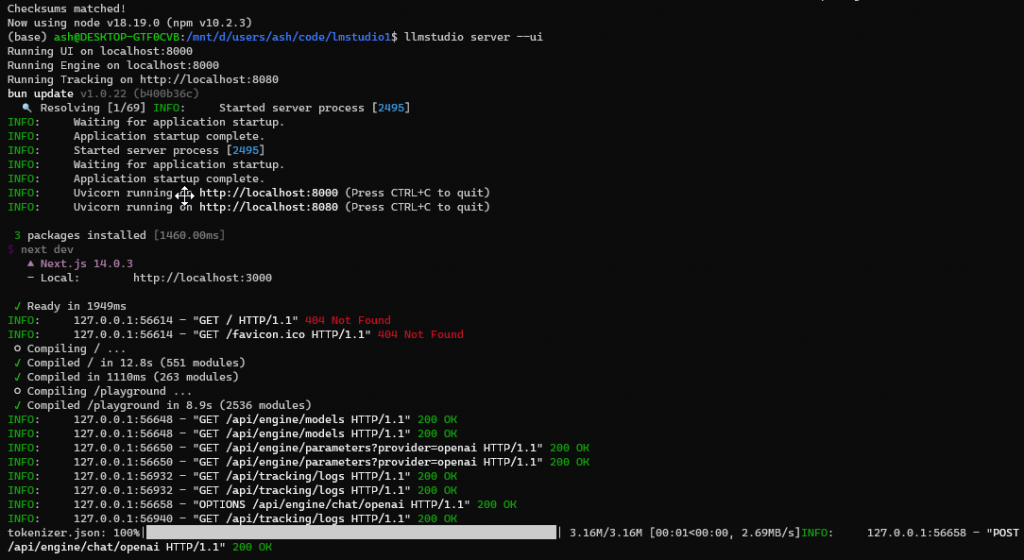
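You can check the Bun and Node.js setup before starting LLMStudio. Below is a minimal Python sketch that uses only the standard library and is not part of LLMStudio. It assumes LLMStudio uses whichever `bun` and `node` are on the PATH of the WSL shell it is started from. It treats Node.js v18 as the minimum, matching the step above. The helper names `node_major_version` and `check_prerequisites` are hypothetical.

```python
import re
import shutil
import subprocess
from typing import Optional


def node_major_version(version_text: str) -> Optional[int]:
    """Parse the major version from `node --version` output such as 'v18.19.0'."""
    match = re.match(r"\s*v?(\d+)\.", version_text)
    return int(match.group(1)) if match else None


def check_prerequisites(min_node_major: int = 18) -> list[str]:
    """Return a list of problems with bun/node on PATH (empty list if none found).

    Assumption: LLMStudio uses the bun and node found on PATH in this shell.
    """
    problems = []
    if shutil.which("bun") is None:
        problems.append("bun was not found on PATH")
    node = shutil.which("node")
    if node is None:
        problems.append("node was not found on PATH")
    else:
        out = subprocess.run([node, "--version"], capture_output=True, text=True).stdout
        major = node_major_version(out)
        if major is None:
            problems.append(f"could not parse node version from {out!r}")
        elif major < min_node_major:
            problems.append(f"node {out.strip()} is older than v{min_node_major}")
    return problems


if __name__ == "__main__":
    issues = check_prerequisites()
    print("\n".join(issues) if issues else "bun and node look OK")
```

Run it from inside the activated conda environment in WSL, so it sees the same PATH that LLMStudio will see.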

- Start LLMStudio

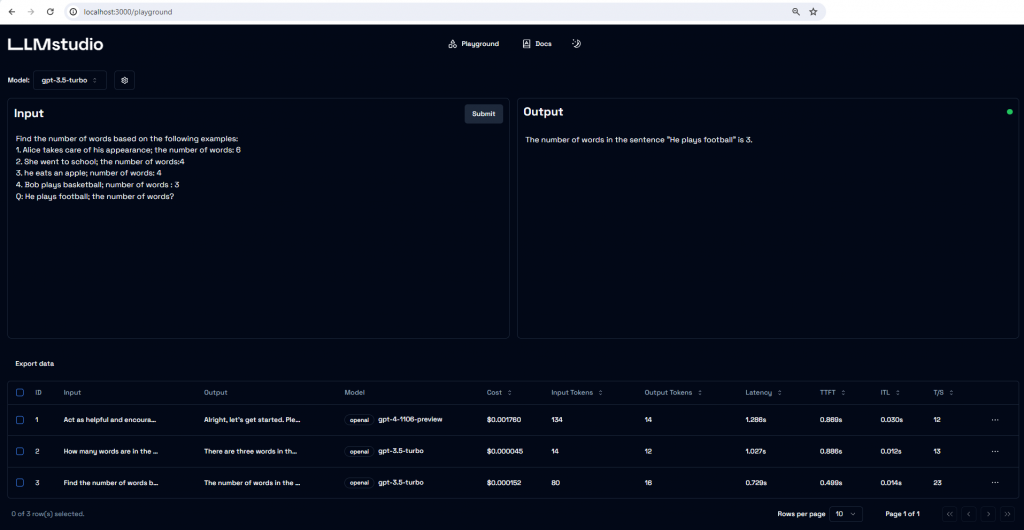

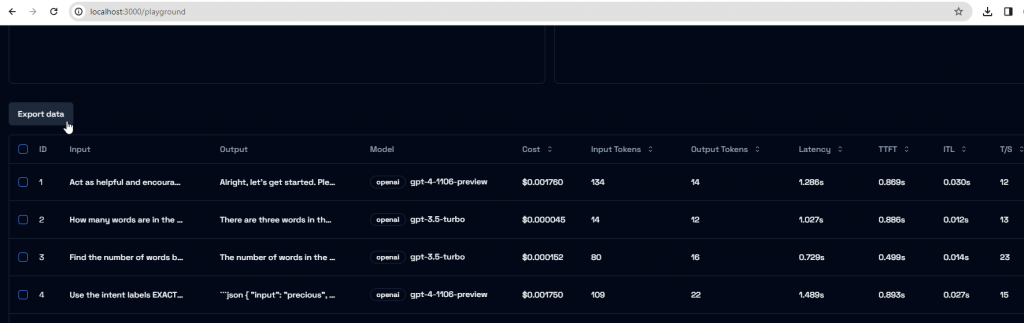

Export the execution data as a CSV file by clicking the [Export data] button. The export includes the input, output, LLM model, input and output token counts, and cost.
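The point of tracking runs is to compare models and their relative cost. One way to use the export is to total runs, tokens, and cost per model. The following is a minimal standard-library Python sketch, not an LLMStudio feature. The column names (`model`, `input_tokens`, `output_tokens`, `cost`) and the helper names are assumptions, so adjust them to match the header row of your actual export.

```python
import csv
import io
from collections import defaultdict
from typing import Iterable, TextIO

# Hypothetical column names: check them against the header row of your own export.
MODEL_COL = "model"
INPUT_TOKENS_COL = "input_tokens"
OUTPUT_TOKENS_COL = "output_tokens"
COST_COL = "cost"


def summarize_by_model(rows: Iterable[dict]) -> dict[str, dict[str, float]]:
    """Aggregate run count, input/output tokens, and total cost per model."""
    totals = defaultdict(
        lambda: {"runs": 0, "input_tokens": 0, "output_tokens": 0, "cost": 0.0}
    )
    for row in rows:
        t = totals[row[MODEL_COL]]
        t["runs"] += 1
        # Empty cells are treated as zero; float() first tolerates values like "12.0".
        t["input_tokens"] += int(float(row[INPUT_TOKENS_COL] or 0))
        t["output_tokens"] += int(float(row[OUTPUT_TOKENS_COL] or 0))
        t["cost"] += float(row[COST_COL] or 0)
    return dict(totals)


def summarize_export(f: TextIO) -> dict[str, dict[str, float]]:
    """Read an exported CSV file object and summarize it per model."""
    return summarize_by_model(csv.DictReader(f))


if __name__ == "__main__":
    # Placeholder rows standing in for a real export (not real model names or prices).
    sample = io.StringIO(
        "model,input_tokens,output_tokens,cost\n"
        "model-a,120,80,0.50\n"
        "model-b,120,95,1.25\n"
        "model-a,150,60,0.40\n"
    )
    for model, t in summarize_export(sample).items():
        print(f"{model}: {t['runs']} runs, {t['input_tokens']} in / "
              f"{t['output_tokens']} out tokens, cost {t['cost']:.4f}")
```

For a real export, open the downloaded file with `open(path, newline="")` and pass the file object to `summarize_export`.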

References

- What is LLMstudio?
- LLMstudio by TensorOps
- LLM Studio Quickstart
- Install Bun for Windows
- Setup Bun JS in windows using WSL and VS code
- NextJS – ReferenceError: Request is not defined